How potch.me Works

I claimed at some point I'd talk about the code that powers this website, and seeing as it recently underwent a minor renovation, now's as good a time as any to pop the hood! I, like so many developers, suffer from the "roll my own" website affliction. It's a chronic, and often terminal, diagnosis, but largely benign overall apart from its deleterious effect on post rate, since the temptation to tinker is always right there. I think one's own online home is a great testbed for trying things out, provided the end state is a good DX for yourself. Alas, the cobbler's children are frequently unshod. For evidence, see also: this website.

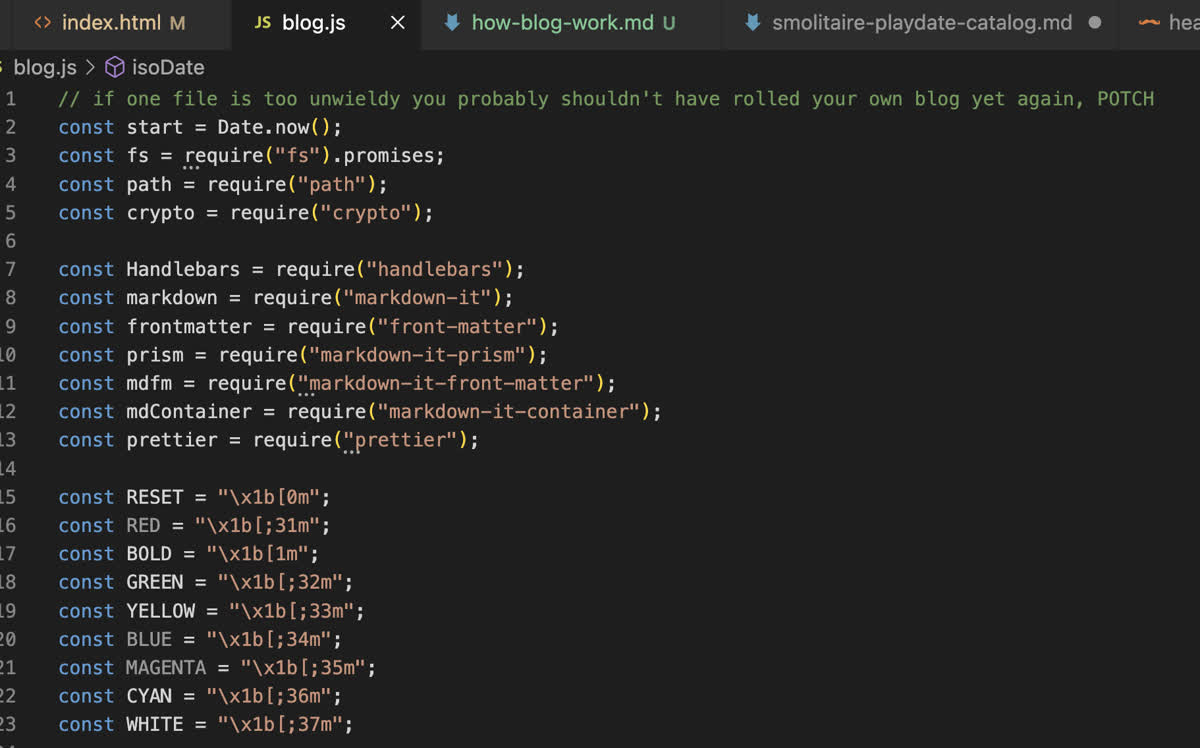

All of this to say that this website is powered by a custom, one-file (plus dependencies) static site generator. As input: a folder of files consisting of markdown, HTML, handlebars templates, and assorted images, styles, and scripts. The output is a folder, matching the input's folder structure, with markdown files rendered into HTML using said handlebars templates, and all other files copied over as-is. All of that takes fewer than 600 lines of code, if you don't count dependencies, and why would anyone in frontend count the dependencies!!

Oh, we have fun.

The process script is run via `onchange` whenever the input directory changes. A sample log of an iteration looks something like this:

```
[blog] onchange: src/2023/how-blog-work.md
[72ms +72ms] loaded
[73ms +1ms] indexing
[114ms +41ms] building tree
[114ms +0ms] diffing
[blog] 5 changed items
[115ms +1ms] building
[blog] Executing 6 tasks
[render] 2023/how-blog-work.html
[render] index.html
[render] rss.xml
[render] tag/js.html
[render] tag/frontend.html
[render] tag/node.html
[342ms +227ms] done!
```
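Each timing prefix in that log seems to carry two numbers: time since the build started and time since the previous step (e.g. `[114ms +41ms]`). Here's a minimal TypeScript sketch of a logger that produces that format, assuming that reading of the numbers; `createPhaseLogger` and its injectable clock are hypothetical names, not taken from the actual script:

```typescript
// Hypothetical phase logger: prints "[<total>ms +<delta>ms] <label>", where
// total is time since the logger was created and delta is time since the
// previous call. The clock is injectable so output can be made deterministic.
type Clock = () => number;

function createPhaseLogger(now: Clock = () => performance.now()) {
  const start = now();
  let last = start;
  return (label: string): string => {
    const t = now();
    const line = `[${Math.round(t - start)}ms +${Math.round(t - last)}ms] ${label}`;
    last = t;
    console.log(line);
    return line;
  };
}

// Usage with a fake clock that returns canned timestamps.
const ticks = [0, 72, 73, 114];
let tick = 0;
const log = createPhaseLogger(() => ticks[tick++]);
log("loaded"); // [72ms +72ms] loaded
log("indexing"); // [73ms +1ms] indexing
log("building tree"); // [114ms +41ms] building tree
```

In a real build you'd call it with the default clock and log once at the end of each phase.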

In the above output you can see a number of phases. The main phases of building the site are `index`, `diff`, `build`, and `execute`, and each of these operates on an in-memory index. During indexing, every file in my `src` directory is crawled and loaded into the index as a document. There's an `indexers` object that has handlers for different file extensions. Markdown files are loaded and parsed into tokens, and metadata is extracted, such as title, tags, custom blurb, hero image, and whether the page uses a custom template or needs custom styles or scripts. `.hbs` files are loaded and precompiled for rendering, either as partials (`.partial.hbs`) or as page templates. Pretty much every other file is simply hashed and stored as a path reference to be copied in the execute phase.
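To make the per-extension dispatch concrete, here's a rough TypeScript sketch of an `indexers` table with a hash-and-copy fallback. Everything beyond the `indexers` name is an assumption: the `Doc` shapes, the naive `key: value` frontmatter reader (standing in for markdown-it's tokenizer plus a real frontmatter plugin), and the 32-bit FNV-1a hash (standing in for whatever content hash the real script uses). Handlebars precompilation is left out.

```typescript
// Hypothetical document shapes produced by indexing.
type Doc =
  | { kind: "page"; path: string; hash: string; title?: string; tags: string[] }
  | { kind: "template"; path: string; hash: string; partial: boolean }
  | { kind: "asset"; path: string; hash: string };

type Indexer = (path: string, contents: string) => Doc;

// Stand-in content hash (32-bit FNV-1a over UTF-16 code units).
function fnv1a(text: string): string {
  let h = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    h ^= text.charCodeAt(i);
    h = Math.imul(h, 0x01000193);
  }
  return (h >>> 0).toString(16);
}

// Naive frontmatter reader: only "key: value" lines between leading "---" fences.
function readFrontmatter(src: string): Record<string, string> {
  const meta: Record<string, string> = {};
  const match = /^---\n([\s\S]*?)\n---/.exec(src);
  if (!match) return meta;
  for (const line of match[1].split("\n")) {
    const colon = line.indexOf(":");
    if (colon > 0) meta[line.slice(0, colon).trim()] = line.slice(colon + 1).trim();
  }
  return meta;
}

// Handlers keyed by file extension.
const indexers: Record<string, Indexer> = {
  ".md": (path, src) => {
    const meta = readFrontmatter(src);
    const tags = meta.tags ? meta.tags.split(",").map((t) => t.trim()) : [];
    return { kind: "page", path, hash: fnv1a(src), title: meta.title, tags };
  },
  // A real build would also precompile the template with Handlebars here.
  ".hbs": (path, src) => ({
    kind: "template",
    path,
    hash: fnv1a(src),
    partial: path.endsWith(".partial.hbs"),
  }),
};

// Everything else is just hashed and remembered as a path to copy later.
const fallback: Indexer = (path, src) => ({ kind: "asset", path, hash: fnv1a(src) });

function indexFile(path: string, contents: string): Doc {
  const base = path.slice(path.lastIndexOf("/") + 1);
  const dot = base.lastIndexOf(".");
  const ext = dot >= 0 ? base.slice(dot) : "";
  return (indexers[ext] ?? fallback)(path, contents);
}

console.log(indexFile("2023/how-blog-work.md", "---\ntitle: How potch.me Works\ntags: js, node\n---\nHello"));
```

Because `.partial.hbs` still ends in `.hbs`, partials and page templates share one handler and are told apart by the filename suffix.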

Each file, when crawled, is hashed, and I attempt to determine any dependencies. Markdown files generate both inbound and outbound dependencies: if the template a page uses changes, we'll need to re-render it, but we may also need to regenerate the index, the RSS feed, and any tag pages that include the page. Templates have dependencies on any partials they use. All of this is represented in a dependency tree that is stored on disk between builds. Once all the source files are indexed, each file's hash is diffed against the previous hash for that path in the on-disk index.
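The hash comparison can be sketched as a plain diff between two path-to-hash maps. This is a simplified illustration that assumes the stored index boils down to a JSON object mapping each source path to its hash; `HashIndex` and `diffIndex` are hypothetical names, and the dependency tree that also lives in the on-disk index is left out here.

```typescript
// Hypothetical on-disk index shape: source path -> content hash.
type HashIndex = Record<string, string>;

interface IndexDiff {
  added: string[];
  changed: string[];
  removed: string[];
}

// Own-property check, so keys like "constructor" aren't mistaken for entries.
const has = (obj: object, key: string): boolean =>
  Object.prototype.hasOwnProperty.call(obj, key);

function diffIndex(prev: HashIndex, next: HashIndex): IndexDiff {
  const diff: IndexDiff = { added: [], changed: [], removed: [] };
  for (const [path, hash] of Object.entries(next)) {
    if (!has(prev, path)) diff.added.push(path);
    else if (prev[path] !== hash) diff.changed.push(path);
  }
  // Paths that vanished since the last build.
  for (const path of Object.keys(prev)) {
    if (!has(next, path)) diff.removed.push(path);
  }
  return diff;
}

// The previous index comes back from the JSON written at the end of the last build.
const previous: HashIndex = JSON.parse('{"a.md":"1","b.md":"2","old.md":"3"}');
const current: HashIndex = { "a.md": "1", "b.md": "9", "new.png": "4" };
console.log(JSON.stringify(diffIndex(previous, current)));
// {"added":["new.png"],"changed":["b.md"],"removed":["old.md"]}
```

Only the `added` and `changed` paths (plus whatever depended on `removed` ones) need to feed the next step.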

Once we have a list of changed tree items, they are "expanded" into the full set of affected tree nodes, which are then themselves hydrated into a list of tasks. Most are simple, like "copy this file over" or "render this markdown using this template", but some are more complex. `index` is a special tree node that creates the homepage index file but also regenerates my RSS feed. `tag` nodes don't correspond to any actual files in the source tree; they are virtual nodes that render out to the tag listing pages.
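The "expand" step amounts to a reachability walk over reverse dependencies: start from the changed nodes and keep following "is depended on by" edges until nothing new turns up. A small TypeScript sketch, assuming a graph stored as node → nodes it depends on, plus a hypothetical rule for turning nodes into `render` or `copy` tasks (templates and partials produce no task of their own). Node names like `index` and `tag/js` mirror the post; the graph itself is made up.

```typescript
// Hypothetical dependency graph: node -> nodes it depends on.
type Graph = Record<string, string[]>;
type Task = { type: "render" | "copy"; node: string };

// Invert "depends on" edges into "is depended on by" edges.
function dependents(graph: Graph): Map<string, string[]> {
  const rev = new Map<string, string[]>();
  for (const [node, deps] of Object.entries(graph)) {
    for (const dep of deps) {
      const list = rev.get(dep) ?? [];
      list.push(node);
      rev.set(dep, list);
    }
  }
  return rev;
}

// Breadth-first walk from the changed nodes to everything downstream of them.
function expand(graph: Graph, changed: string[]): Set<string> {
  const rev = dependents(graph);
  const affected = new Set(changed);
  const queue = [...changed];
  while (queue.length > 0) {
    const node = queue.shift()!;
    for (const next of rev.get(node) ?? []) {
      if (!affected.has(next)) {
        affected.add(next);
        queue.push(next);
      }
    }
  }
  return affected;
}

// Hypothetical hydration rule: templates only feed other renders, markdown and
// virtual nodes (index, tag/*) render, and everything else is copied.
function toTasks(nodes: Iterable<string>): Task[] {
  const tasks: Task[] = [];
  for (const node of nodes) {
    if (node.endsWith(".hbs")) continue;
    const render = node.endsWith(".md") || node === "index" || node.startsWith("tag/");
    tasks.push({ type: render ? "render" : "copy", node });
  }
  return tasks;
}

const graph: Graph = {
  "2023/how-blog-work.md": ["post.hbs"],
  "2022/older-post.md": ["post.hbs"],
  "post.hbs": ["header.partial.hbs"],
  index: ["2023/how-blog-work.md", "2022/older-post.md"],
  "tag/js": ["2023/how-blog-work.md"],
};

console.log(toTasks(expand(graph, ["2023/how-blog-work.md"])));
```

Expanding from `2023/how-blog-work.md` yields render tasks for the post itself, `index`, and `tag/js`. This naive transitive walk also reaches `index` and the tag pages when only a template changes, which over-approximates the work; distinguishing edge types would be one way to tighten that.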

Right now I only have two task types in the execute phase: `render` (markdown to HTML with metadata) and `copy`. Those are run with some concurrency so as not to block on file I/O. I render markdown using markdown-it with a few plugins to do syntax highlighting, recognize frontmatter, and apply custom classes to passages of text. I run all generated HTML through prettier so it's nicely indented for all my fellow View Source Sickos out there.
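Running tasks "with some concurrency" can be as simple as a fixed pool of async workers pulling from a shared list. A minimal sketch, assuming each task is wrapped as an async thunk; `runWithConcurrency`, the `Task` shape, and the limit of 4 are illustrative choices, not the actual implementation.

```typescript
// Run async jobs with at most `limit` in flight, preserving result order.
async function runWithConcurrency<T>(
  jobs: Array<() => Promise<T>>,
  limit: number,
): Promise<T[]> {
  const results: T[] = new Array(jobs.length);
  let next = 0;
  // Each worker keeps claiming the next unstarted job until none remain.
  // Claiming needs no lock because JS runs these workers on a single thread.
  async function worker(): Promise<void> {
    while (next < jobs.length) {
      const i = next++;
      results[i] = await jobs[i]();
    }
  }
  const poolSize = Math.max(1, Math.min(limit, jobs.length));
  await Promise.all(Array.from({ length: poolSize }, () => worker()));
  return results;
}

// Hypothetical task shapes; real jobs would render markdown or copy files.
type Task = { type: "render" | "copy"; path: string };
const tasks: Task[] = [
  { type: "render", path: "2023/how-blog-work.html" },
  { type: "render", path: "index.html" },
  { type: "copy", path: "img/hero.png" },
];

runWithConcurrency(
  tasks.map((task) => async () => `${task.type} ${task.path}`),
  4,
).then((done) => console.log(done));
```

The concurrency here only overlaps time spent waiting on I/O, which is the goal when the point is not blocking on file reads and writes.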

Once all my tasks are complete, I write the new index to disk as JSON. Right now a full forced rebuild of the site takes under 1.5s. Most rebuilds are incremental and take less than 500ms.

During local development, all the generated files are served using my (also home-rolled, natch) static file server tinyserve, which supports live reloading. To update my site, I use rsync to just slorp the built files up to my web host.

I'm very happy with the current state of things, as evidenced by the fact that this will be my second post in as many weeks! I'm not planning on open-sourcing the static site generator. That's not because it's particularly clever or proprietary, but because it's kinda mushed together and functional, and the code for the blog is in the same GitHub repo as all my actual posts and unpublished stuff, and I don't feel like splitting it out on an ongoing basis. But here's a link to a gist of the code as it stands at the time of this post.

If you are in the market for a compact static site generator, may I recommend my pal Osmose's new tool Phantomake!